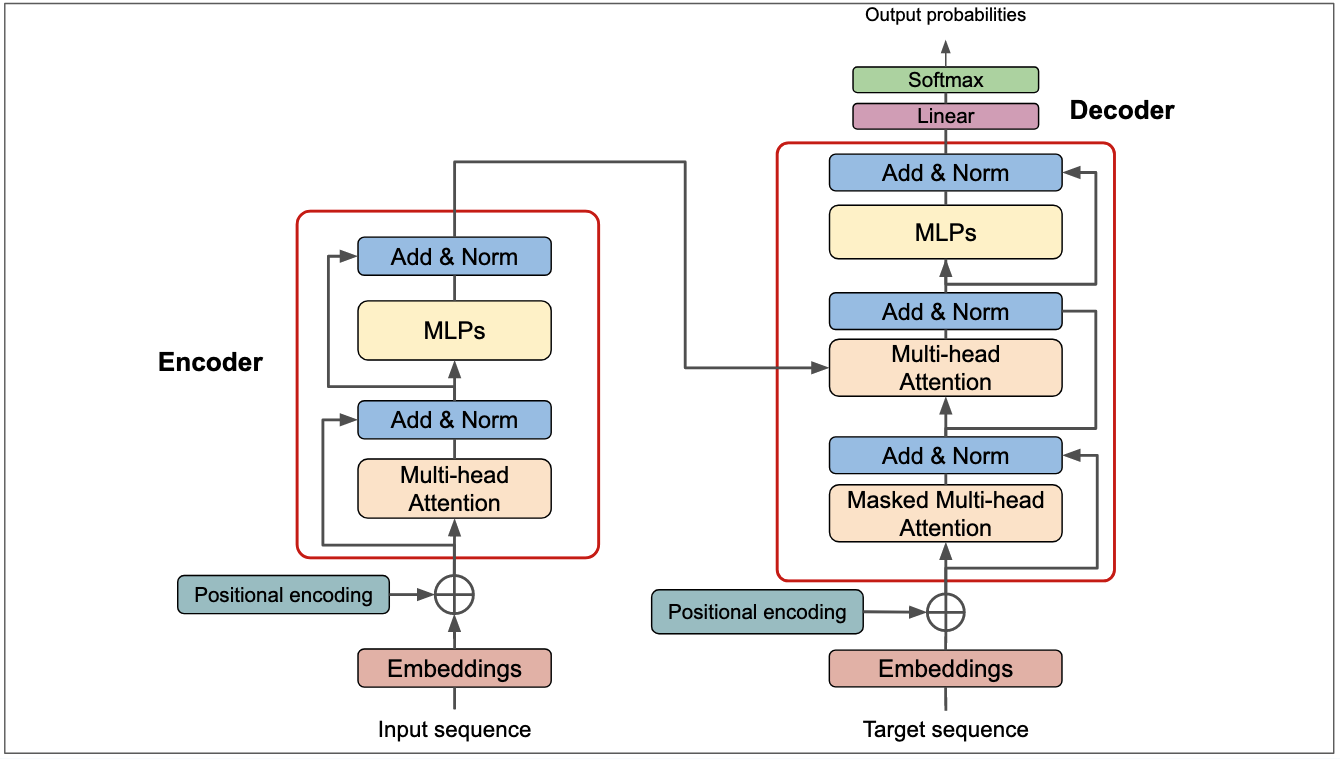

Understanding Transformers — The Architecture Behind Modern LLMs

A deep dive into the Transformer architecture: self-attention, multi-head attention, positional encodings, and scaling laws.
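Self-attention is the core operation of the architecture, so here is a minimal, dependency-free sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, in plain Python. It assumes Q, K and V have already been computed. The learned projection matrices, masking, batching and multiple heads are all omitted. The helper names (`matmul`, `transpose`, `softmax`, `scaled_dot_product_attention`) are illustrative and are not taken from the article or from any library.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Naive matrix product: (n x k) @ (k x m) -> (n x m)
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the row max before exponentiating for numerical stability
    mx = max(row)
    exps = [math.exp(x - mx) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    # q: (n_q x d_k), k: (n_k x d_k), v: (n_k x d_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (n_q x n_k) query-key dot products
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # each row sums to 1
    return matmul(weights, v)  # each output row is a weighted average of value rows


# Self-attention on a toy 3-token sequence: Q = K = V = x (projections omitted)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = scaled_dot_product_attention(x, x, x)
```

Multi-head attention runs several of these computations in parallel on lower-dimensional projections and concatenates the results. The 1/sqrt(d_k) factor keeps the dot products from growing with the key dimension and pushing the softmax into regions with very small gradients.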